In this final post on the analysis in our paper on fish shoaling, I'll show how we can adapt the technique of fitting a function with a neural network to isolate the different cues that the fish respond to simultaneously.

In general we might imagine that a fish moving in a shoal is presented with an array of potential stimuli at any moment. Just for starters, it has the positions, movements and behaviours of its many neighbouring fish to consider. In addition to this there are other environmental cues, such as the positions of the walls of the fish tank in a laboratory experiment, or the possible locations of predators in the wild. We can expect that the behaviour of the focal fish at any moment will be due to a combination of all these effects (I'm intentionally avoiding the words 'sum' or 'product' for reasons that we'll soon see).

Now, in the last post we learned how we can fit the behaviour of a focal fish as a function of any input stimuli that we choose. We saw an example using just the position of the nearest neighbour to predict the acceleration response of the focal fish. There is nothing in that approach to stop us instead using far more input stimuli. We could, for example, construct a neural network to predict the acceleration from the positions of the nearest 3 neighbours.

This would, in principle, allow us to learn how the acceleration of the focal fish depends, in potentially very complex ways, on the positions of its 3 nearest neighbours (NNs - don't get confused with neural networks!). However, such an approach has a few significant drawbacks. Firstly, from a technical viewpoint, the larger we make the space of possible inputs (i.e. the more stimuli we use), the harder it becomes to train the neural network. The more inputs we have, the more combinations of those inputs are possible. It becomes less and less likely that our data will cover a large enough proportion of those possible combinations to allow us to learn the connections between inputs and outputs.
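To put rough numbers on that first drawback, here is a small back-of-the-envelope sketch. It assumes we chop each input into 10 bins and have 100,000 recorded time steps; both figures are made up for illustration and are not taken from our data. Three neighbours, each described by an angle and a distance, give 6 inputs.

```python
def n_cells(bins_per_input: int, n_inputs: int) -> int:
    """Number of distinct input combinations on a regular grid."""
    return bins_per_input ** n_inputs


def coverage_upper_bound(n_samples: int, bins_per_input: int, n_inputs: int) -> float:
    """Largest fraction of grid cells the data could possibly visit
    (reached only if every sample lands in a different cell)."""
    return min(1.0, n_samples / n_cells(bins_per_input, n_inputs))


n_samples = 100_000  # hypothetical number of recorded time steps
bins = 10            # hypothetical resolution per input

for n_inputs in (2, 4, 6):
    cells = n_cells(bins, n_inputs)
    best_case = coverage_upper_bound(n_samples, bins, n_inputs)
    print(f"{n_inputs} inputs: {cells} cells, at most {best_case:.0%} covered")
```

Even in the best case, where no two samples fall in the same cell, the 6-input grid is mostly empty, while the 2-input grid could be covered many times over.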

Secondly, even if we could learn a function of the 3 positions (6 variables in total, each position being an angle and distance), how are we to 'see' what we have learnt? We may be able to try new combinations of the inputs and find the predicted acceleration, but it is going to be almost impossible for us to visualise the function.

Finally, how can we relate this back to previously established theories of collective motion? If we learn some highly complex function of the positions of all the neighbouring fish, what does that tell us about the simple rules of interaction that have previously been the standard way of understanding these phenomena?

We can get around these difficulties by considering the sort of interaction rules that have been suggested before. These have almost exclusively considered additive responses - the response of the fish to 2 neighbours is simply the sum of the response to each neighbour individually. This is akin to most effects in physics - the force on a spaceship is the sum of the gravitational force from the Earth, the gravitational force from the Moon, the gravitational force from the Sun, and so on. The existence of the Moon doesn't change the force exerted on the spaceship by the Earth (at a specific moment in time).

So we can propose a model where the acceleration of the focal fish is a function, f, of the positions of 3 neighbours (p1, p2, p3), this function itself being the sum (see why we avoided that word earlier) of 3 simpler functions, g1, g2, g3.

Acceleration = f(p1, p2, p3) = g1(p1) + g2(p2) + g3(p3) + residual
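As a toy illustration of what the additive assumption buys us, the sketch below uses three made-up response functions (the shapes of g1, g2 and g3 are purely hypothetical, and each position is reduced to a single number rather than an angle and a distance). It checks that moving the first neighbour changes the response by the same amount wherever the other two neighbours happen to be, just as the Moon does not alter the Earth's pull on the spaceship.

```python
import math


# Hypothetical single-neighbour responses, chosen only for illustration.
def g1(p):
    return math.sin(p)


def g2(p):
    return 0.5 * math.cos(p)


def g3(p):
    return 0.1 * p


def f(p1, p2, p3):
    """Additive model: the total response is the sum of the individual responses."""
    return g1(p1) + g2(p2) + g3(p3)


def effect_of_moving_first(p1_old, p1_new, p2, p3):
    """Change in response when only the first neighbour moves."""
    return f(p1_new, p2, p3) - f(p1_old, p2, p3)


# Same move of neighbour 1, two very different configurations of neighbours 2 and 3.
a = effect_of_moving_first(0.2, 1.0, p2=0.3, p3=2.0)
b = effect_of_moving_first(0.2, 1.0, p2=2.5, p3=-1.0)
print(a, b)  # the two values agree up to floating point rounding
```

A non-additive model would fail this check, because the effect of one neighbour would depend on where the others are.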

Now, just as we can model a function using a neural network, we can model 3 functions using 3 neural networks. The eventual acceleration is now the sum of the outputs from those 3 networks. I'm going to stop drawing each network properly and just treat them as black boxes, as I encouraged you to do in the last post.

So the task of estimating one complicated function by learning one big neural network is now changed to the task of estimating 3 hopefully simpler functions using 3 smaller networks. The question is how to learn all 3. There is a choice to be made - do you try to learn all 3 simultaneously, or do you prioritise some over others?

In

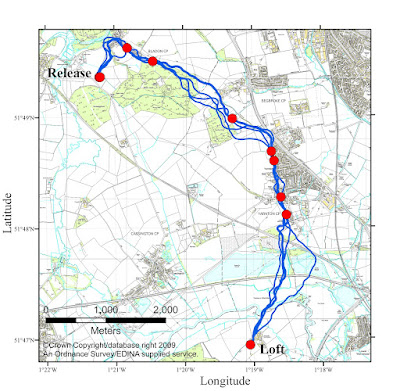

our paper we took both approaches for different subsets of the stimuli. We considered the position of the 3 nearest neighbours, but also the past behaviour of the focal fish and the position of the wall, give us a schematic as below.

Or, in equation form:

Acceleration = gpast(past) + gwall(wall) + g1(p1) + g2(p2) + g3(p3) + residual

We used two biologically motivated arguments to decide how to approach learning this combination:

1. There is no reason to suspect, a priori, that the past behaviour of the fish, the position of the tank wall or the positions of the neighbouring fish are more or less important than each other.

2. It is implausible to imagine that the focal fish interacts with its 2nd or 3rd nearest neighbours but not with the first nearest.

Therefore we will learn the first three networks (past, wall, NN1) simultaneously, since each of these should have the chance to be the primary factor in predicting acceleration. Networks 4 and 5 (NN2 and NN3) will be learned subsequently, using whatever part of the fish's acceleration has not been accurately predicted by the first 3 networks. This means, for instance, that the interaction between a fish and its second nearest neighbour will only be allowed to account for what cannot be predicted by its interaction with its first nearest neighbour.

The process of learning multiple networks, either simultaneously or in succession, is relatively similar. We iteratively learn each network, while assuming that the others are already known. Let's look at how we learn the networks associated with the past, the wall and the nearest neighbour:

1. Start each network in a random configuration, just like when we learn a single network.

2. Now, we assume that the networks associated with the past and the wall are known and correct. We pass our measured values for these stimuli into the networks and record the output, getting a predicted acceleration_past+wall (recall the USE function on the black box from the last post).

3. We now learn the nearest neighbour network using our measured values of the nearest neighbour position as inputs, and the difference between the actual acceleration and the acceleration predicted in step 2 as the target outputs.

4. Once the NN1 network is learnt, we fix its state and then apply the same technique to learn the past network. Fixing the wall and NN1 networks in their current states we predict what the acceleration should be from the wall and nearest neighbour alone.

5. Then, like in step 3, we now learn the past network, using the difference between the predicted and observed accelerations as the output.

6. Having learnt the past network, we fix its new state, and we predict the acceleration from the past and the nearest neighbour networks.

7. And now we learn the wall network, using the measured values of the wall position and the difference between the predicted acceleration and the observed acceleration.

8. We can now test how good our 3 networks combined are at predicting the real acceleration by feeding the measured stimuli through all 3 in their current states.

9. Now we have learnt each of the first 3 networks once. However, we are not finished. From this point we go back to step 2 and go through steps 2 to 8 again, repeating the whole process until the error between predicted acceleration and real acceleration stops improving.

So by this process we have learnt, simultaneously, the functions associated with the past, the wall and the nearest neighbour. The process of learning each network in this iterative fashion divides the observed accelerations into components associated with the different stimuli in a way that creates the best match between the predicted and measured values, given that the additive assumption is correct. It is of course possible that there are more complicated interactions that depend on, for example, the position of the wall and the nearest neighbour in some complex non-additive fashion. We have found the best additive function that estimates the true process.
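For readers who prefer code, steps 1 to 9 can be sketched roughly as below. This is not the code from our paper: to keep it short and dependency-free, each neural network is swapped for a simple binned-average smoother, each stimulus is reduced to a single number between 0 and 1, the models start at zero rather than in a random configuration, and the data are synthetic. The names `fit_binned_mean` and `backfit` are invented for this sketch.

```python
import math
import random


def fit_binned_mean(xs, ys, n_bins=10, lo=0.0, hi=1.0):
    """Stand-in for training one network: mean of y within equal-width bins of x."""
    def bin_of(x):
        i = int((x - lo) / (hi - lo) * n_bins)
        return min(max(i, 0), n_bins - 1)

    sums, counts = [0.0] * n_bins, [0] * n_bins
    for x, y in zip(xs, ys):
        sums[bin_of(x)] += y
        counts[bin_of(x)] += 1
    means = [s / c if c else 0.0 for s, c in zip(sums, counts)]
    return lambda x: means[bin_of(x)]


def backfit(inputs, target, max_sweeps=50, tol=1e-6):
    """Learn one model per stimulus, each on what the others leave unexplained."""
    n = len(target)
    # Assumption: start every model at zero (the post starts from random networks).
    models = {name: (lambda x: 0.0) for name in inputs}

    def predict(exclude=None):
        # USE every model except the excluded one and sum their outputs.
        return [sum(models[k](inputs[k][i]) for k in inputs if k != exclude)
                for i in range(n)]

    errors = []
    for _ in range(max_sweeps):
        for name in inputs:  # e.g. NN1, then past, then wall
            others = predict(exclude=name)
            residual = [t - o for t, o in zip(target, others)]
            models[name] = fit_binned_mean(inputs[name], residual)
        full = predict()
        errors.append(sum((t - p) ** 2 for t, p in zip(target, full)) / n)
        # Stop once a full sweep no longer improves the error.
        if len(errors) > 1 and errors[-2] - errors[-1] < tol:
            break
    return models, errors


# Synthetic data: three made-up additive components plus noise.
random.seed(0)
n = 2000
inputs = {k: [random.random() for _ in range(n)] for k in ("nn1", "past", "wall")}
true = {
    "nn1": lambda x: math.sin(2 * math.pi * x),
    "past": lambda x: 2 * x - 1,
    "wall": lambda x: (x - 0.5) ** 2,
}
target = [sum(true[k](inputs[k][i]) for k in inputs) + random.gauss(0, 0.1)
          for i in range(n)]

models, errors = backfit(inputs, target)
print(f"sweeps: {len(errors)}, final mean squared error: {errors[-1]:.4f}")
```

One thing the sketch makes visible is that the split into components is only determined up to constants: a fixed offset can move from one component to another without changing the sum, so it is the shape of each learnt function that carries the meaning.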

Now, having found these three networks, we are in a position to successively learn the interactions with the second and third neighbours. Remember that we do these after the first 3 since it is hard to imagine a fish consistently ignoring its nearest neighbour while attending to its second nearest neighbour. As such we only learn each of these once, rather than iterating.

10. Group all 3 of the networks we learned before and predict the acceleration

11. Now learn the second nearest neighbour network (NN2) based on the difference between this prediction and the observed acceleration

12. Now group all 4 of the networks we have learnt so far and predict the acceleration

13. Learn the 3rd nearest neighbour network based on the difference between these predictions and the observed accelerations

14. Phew! Now we have finally learnt every network. We can make predictions based on all 5 networks to test how well the combination predicts the real accelerations

15. But nicely, since each network has only 1 or 2 inputs, we can also input the range of possible values for each input into its respective network and plot the output, enabling us to visualise each component and solving our earlier problem with visualising a high dimensional function. When we do so, we get something that looks a lot like the figure below. The results from the past network are not shown, only those from the wall and the 3 neighbours (from top to bottom respectively). On the left hand side we have the predicted accelerations from each of these networks. On the right hand side we also have the predicted turning angle, using the same process but with measured turning angles instead of accelerations. Recall that each plot is a semicircle because the functions are assumed to be symmetric (acceleration) or anti-symmetric (turning angle).
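Steps 10 to 15 can be sketched in the same spirit. Again this is only an illustration under simplifying assumptions: a binned-average smoother stands in for each neural network, every stimulus is a single number between 0 and 1, the three jointly learnt networks are collapsed into one stand-in model of the nearest neighbour, and the synthetic data are built so that only the nearest neighbour matters. All names are invented for the sketch.

```python
import random


def fit_binned_mean(xs, ys, n_bins=10, lo=0.0, hi=1.0):
    """Stand-in for training one network: mean of y within equal-width bins of x."""
    def bin_of(x):
        i = int((x - lo) / (hi - lo) * n_bins)
        return min(max(i, 0), n_bins - 1)

    sums, counts = [0.0] * n_bins, [0] * n_bins
    for x, y in zip(xs, ys):
        sums[bin_of(x)] += y
        counts[bin_of(x)] += 1
    means = [s / c if c else 0.0 for s, c in zip(sums, counts)]
    return lambda x: means[bin_of(x)]


# Synthetic data in which only the nearest neighbour drives the response.
random.seed(1)
n = 3000
nn1 = [random.random() for _ in range(n)]
nn2 = [random.random() for _ in range(n)]
nn3 = [random.random() for _ in range(n)]
observed = [(2 * a - 1) + random.gauss(0, 0.1) for a in nn1]

# Stand-in for the combined prediction of the networks learnt earlier (step 10).
base_model = fit_binned_mean(nn1, observed)
prediction = [base_model(a) for a in nn1]

# Steps 11 to 13: learn NN2, then NN3, each once, on whatever is still unexplained.
stage_models = {}
for name, xs in (("nn2", nn2), ("nn3", nn3)):
    residual = [o - p for o, p in zip(observed, prediction)]
    model = fit_binned_mean(xs, residual)
    stage_models[name] = model
    prediction = [p + model(x) for p, x in zip(prediction, xs)]

# Step 15: feed a grid of input values through each model to get a plottable curve.
grid = [(i + 0.5) / 10 for i in range(10)]
curves = {"nn1": [base_model(x) for x in grid]}
curves.update({name: [m(x) for x in grid] for name, m in stage_models.items()})

for name, ys in curves.items():
    print(name, [round(y, 2) for y in ys])
```

In this toy setting the nearest neighbour curve rises steadily across the grid, while the curves for the second and third neighbours stay close to zero, which is the kind of flat, structureless output to look out for in the real plots.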

As we saw in the last post, the interaction with the nearest neighbour replicates many of the features we expect, including distance-dependent repulsion and attraction between the two fish. The other interesting result here is how little structure there is in the functions associated with the second and third nearest neighbours. In our paper we interpret this as evidence that the fish primarily interact with their first neighbour only. Such a strong biological interpretation comes with necessary mathematical caveats. It is important to be clear that we have only learnt the best function for mapping stimuli to behaviour out of those which fit the additive structure we proposed. As shown in another paper published alongside ours, interactions may not always be additive. Also, technically what we have shown is that the positions of the second and third neighbours do not help us to predict the behaviour once we know the position of the first nearest neighbour. While this suggests that no interaction takes place, these are subtly different statements.

Despite those final caveats, learning combined functions in this manner is a powerful tool for separating effects in the data, and for learning patterns that may be obscured by other unknown factors (such as the response to the walls of the tank here). I hope you find this useful when considering your own data!